Getting recurring feedback from the end users of your product is a critical component of iterative design and development. During a usability test, designers ask participants to think out loud while they complete tasks using a prototype or test site. This method allows teams to identify and validate assumptions about how users will interact with their product or service, ensure that the product or service is user-centered, and improve inclusivity and accessibility by engaging directly and frequently with end users, particularly those from less-represented communities.

This toolkit is the first in a series of two focused on usability testing. This toolkit covers all of the steps that lead up to conducting a usability test, while the second toolkit in the series covers the steps involved in implementation, synthesis, and analysis. The process described here was developed and streamlined through Nava’s work with the Commonwealth of Massachusetts’ Paid Family and Medical Leave program.

A usability test is: A user research method used to identify problems and opportunities in design by observing participants as they attempt to complete defined tasks on a prototype or interface.

This toolkit can help you:

- Understand the differences between a moderated and unmoderated usability test and determine which one is most appropriate

- Prepare your materials

- Recruit participants

Determine your test type

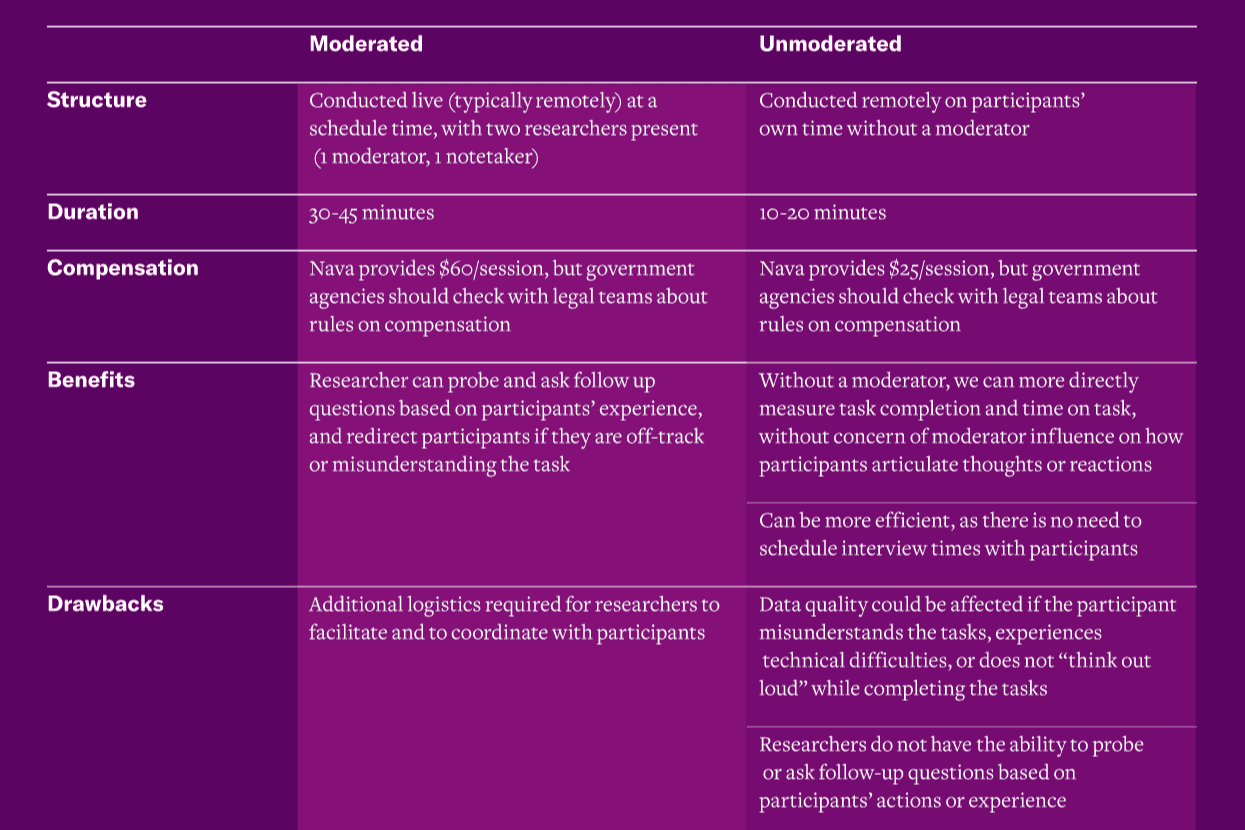

Usability tests can be moderated or unmoderated, depending on the research goals and available resources. In a moderated test, we observe users as they work through tasks while thinking out loud, allowing us to hear feedback in real time and ask follow-up questions. For example, we might ask a user to locate something in the navigation bar and describe their thought process as they search through the navigation options.

In an unmoderated test, we share a link to a website or prototype with tasks for participants to complete on their own, without a researcher or moderator present. These sessions are typically recorded so researchers can review them later. This method lets us measure task times, success rates, and drop-off points.
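The three measures named above (task times, success rates, and drop-off points) can be computed from a simple export of session results. The sketch below is one way to do that in Python. The `Session` fields and the `summarize_task` function are hypothetical names chosen for illustration; they do not reflect the export format of any particular testing tool.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import median


@dataclass
class Session:
    """One participant's attempt at one task (hypothetical export format)."""
    participant_id: str
    completed: bool          # did the participant finish the task?
    seconds_on_task: float   # time from task start to finish or abandonment
    last_step_reached: int   # 1-based step where the session ended


def summarize_task(sessions):
    """Return success rate, median time for completed sessions, and drop-off counts."""
    if not sessions:
        raise ValueError("need at least one session")
    finished = [s for s in sessions if s.completed]
    # Success rate: share of all sessions in which the task was completed.
    success_rate = len(finished) / len(sessions)
    # Task time: median over completed sessions only, so abandoned
    # attempts do not make the task look faster than it is.
    median_seconds = median(s.seconds_on_task for s in finished) if finished else None
    # Drop-off points: for abandoned sessions, count the step where each one ended.
    drop_offs = Counter(s.last_step_reached for s in sessions if not s.completed)
    return {
        "success_rate": success_rate,
        "median_seconds": median_seconds,
        "drop_offs": dict(drop_offs),
    }
```

Reporting the median rather than the mean is a design choice here: with small participant groups, one very slow session can distort an average.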

Key differences: moderated vs. unmoderated tests

Prepare your materials

Start by writing a research brief, a short document that details your research questions, methodology, and timeline. Use this template as a starting point.

Circulate your research brief for consensus building and approvals with key stakeholders.

If your test is moderated, draft your interview script, using this template as a starting point.

If your test is unmoderated, draft your test plan, using this template as a starting point.

Circulate your interview script or test plan to the same group of key stakeholders for feedback and revisions.

Recruit participants

It’s important to recruit a diverse and representative cohort of participants who reflect the characteristics of your target population. Nava’s “Get things right from the beginning with equitable research recruitment” is a great resource that includes practical tips on doing so. Equitable and representative recruitment matters not only for each individual test, but also over time: if you’re conducting monthly or quarterly usability tests, you’ll want to consider both the characteristics of participants for each test and the characteristics of the longer-term cohort.

How to recruit test participants

Develop your screening criteria. Think about which criteria matter most for ensuring that your participant pool is both equitable and representative. This might mean prioritizing participants with a specific education level, age, or location, or participants who have (or have not) had a particular experience with your program, product, or service.

Start by looking at your existing participant pool. Recruitment can be time-consuming, and often more people respond to a recruitment call than we can speak with. Before spinning up a new recruiting initiative, check whether participants from previous initiatives meet your screening criteria. Nava uses Ethnio to track participants and manage recruiting, but you might also have a list of potential participants in a spreadsheet or a customer relationship management system like Salesforce.
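If your existing pool lives in a spreadsheet or an export, checking it against your screening criteria can be scripted. The following is a minimal sketch, assuming each participant record carries a few demographic fields. The `Participant` fields, `meets_criteria`, and `filter_pool` are hypothetical names for illustration and do not correspond to Ethnio's or Salesforce's data model.

```python
from dataclasses import dataclass


@dataclass
class Participant:
    """A past respondent (hypothetical fields; adapt to your own screener)."""
    name: str
    email: str
    age: int
    location: str
    has_used_program: bool


def meets_criteria(p, min_age=None, max_age=None, locations=None, has_used_program=None):
    """Check one participant against the criteria; a criterion left as None is ignored."""
    if min_age is not None and p.age < min_age:
        return False
    if max_age is not None and p.age > max_age:
        return False
    if locations is not None and p.location not in locations:
        return False
    if has_used_program is not None and p.has_used_program != has_used_program:
        return False
    return True


def filter_pool(pool, **criteria):
    """Return the participants in the pool who meet every criterion supplied."""
    return [p for p in pool if meets_criteria(p, **criteria)]
```

For example, `filter_pool(pool, locations={"Springfield"}, has_used_program=False)` would return people in one location who have not yet used the program. A filter like this only narrows the list; judging whether the resulting group is equitable and representative still needs a person.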

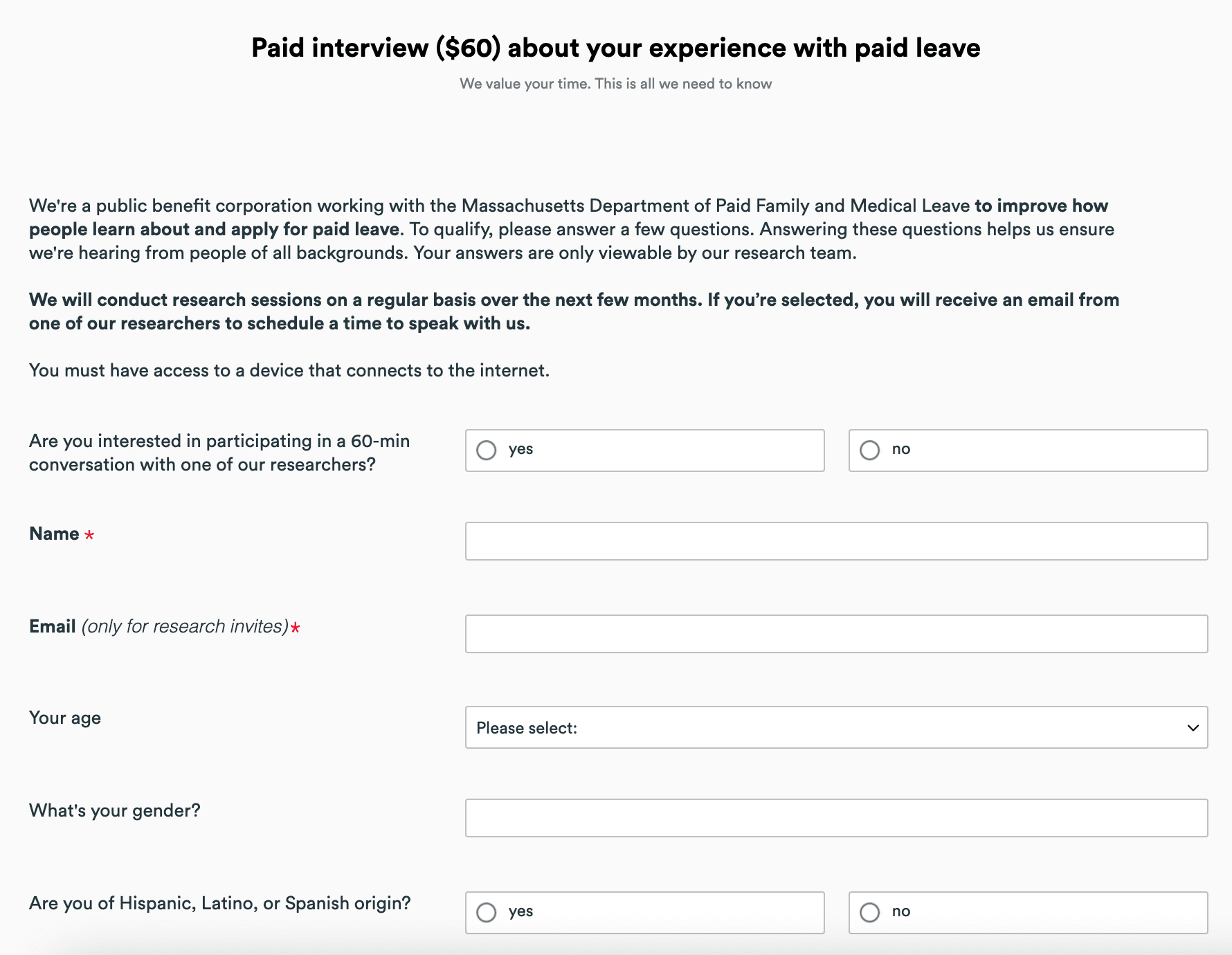

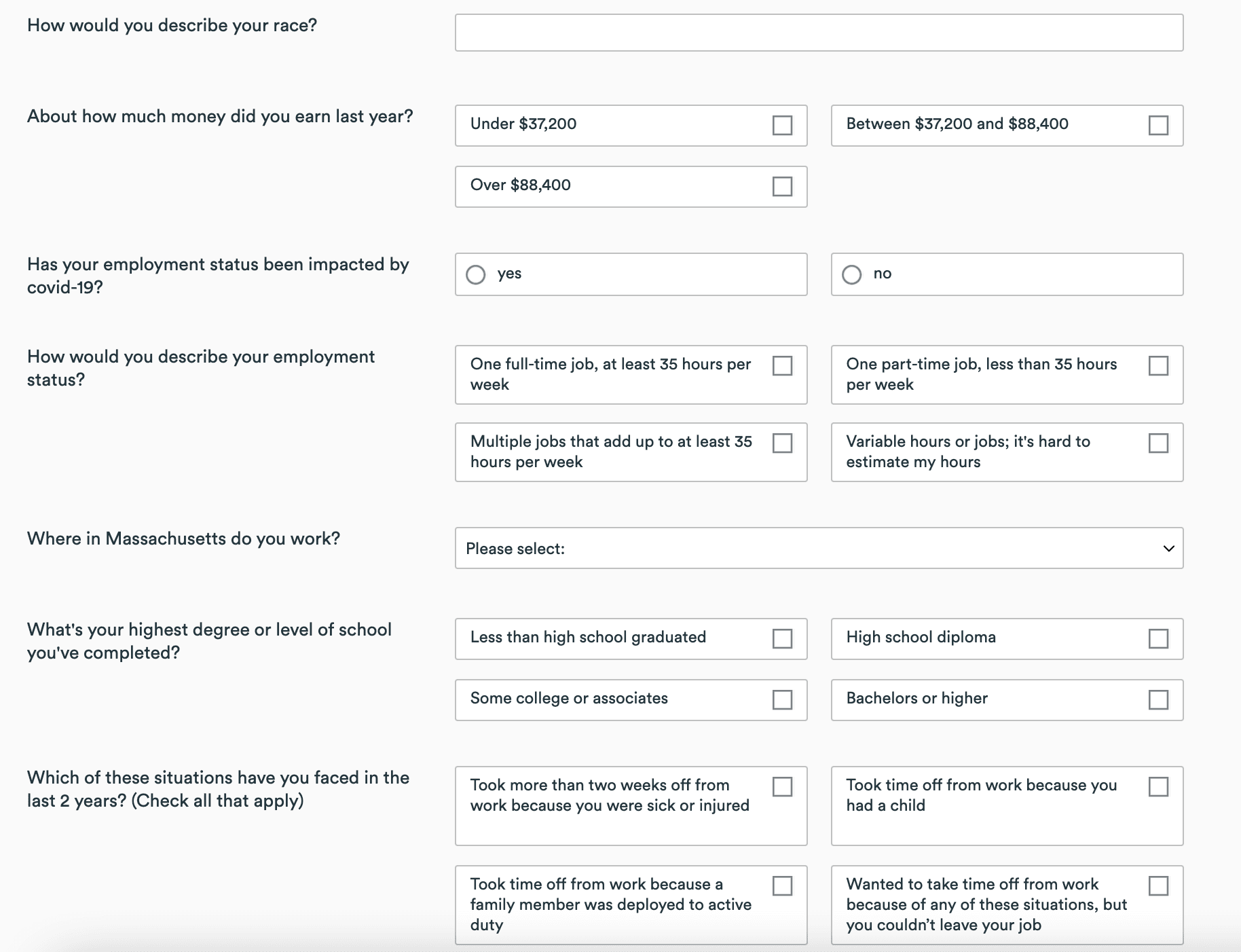

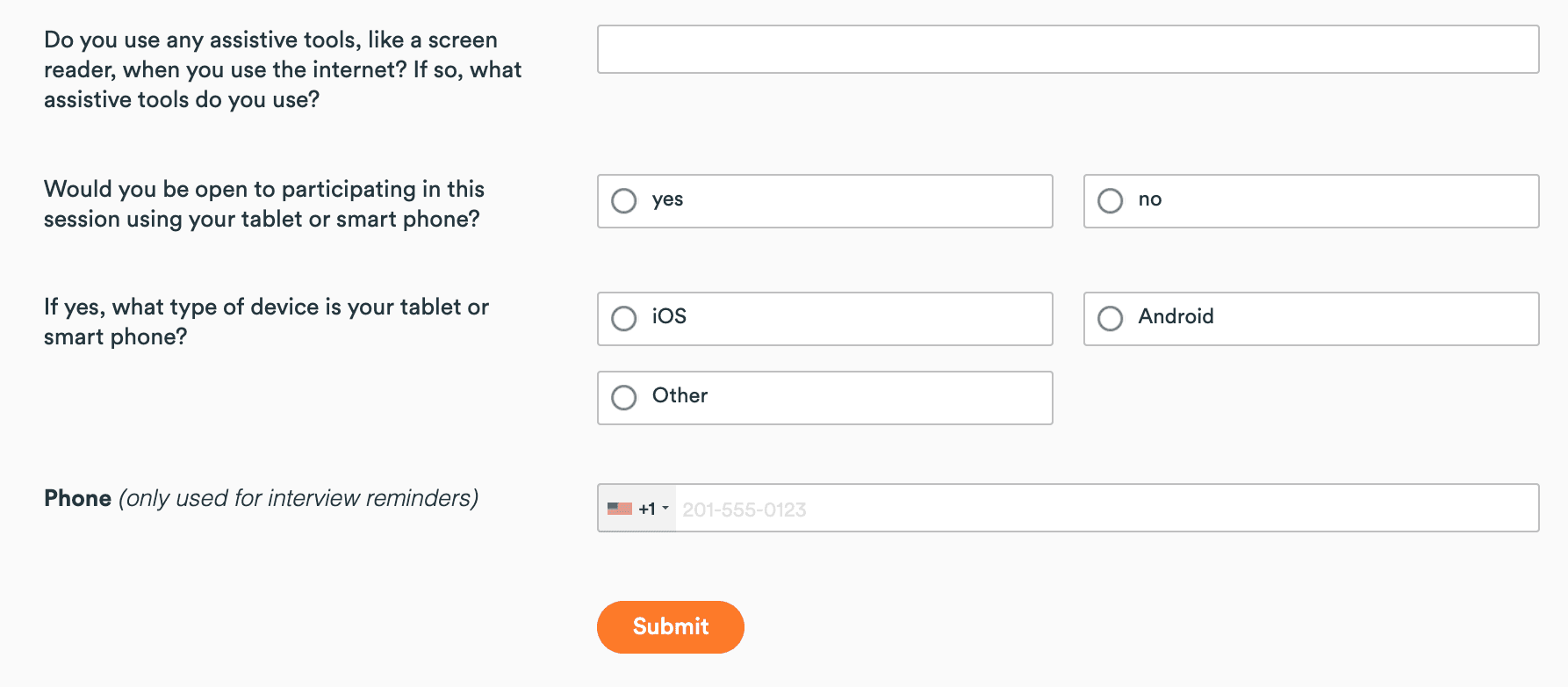

If you need to recruit additional participants, create a screener to gather demographic information. Nava uses Ethnio to create and send screeners, but you can also build them with tools like Google Forms, Microsoft Forms, or Formstack. Your screener should ask for each participant’s name and email address, as well as whichever demographic details are relevant for your test. Below is an example of a screener that we use for recruiting participants for our work with the Commonwealth of Massachusetts’ Paid Family and Medical Leave (PFML) program.

Determine your recruiting channel(s). In our work with PFML, we use the following methods:

Survey respondents: People who use paidleave.mass.gov to apply for leave and employers who manage leave for their organization can fill out a short survey that asks about their experience using the site and whether they’re OK with us contacting them. This pool is always growing, so it’s a great source for potential research participants who have recently interacted with the program.

Craigslist: When targeting participants who have not directly engaged with your product, program, or service, or when looking for specific participant characteristics, recruiting through ads on Craigslist has proven to be a useful method. It has also contributed to a more diverse participant pool.

Personal or professional networks: You, your research team, or your partners may be able to leverage your own connections to recruit potential participants. For example, in PFML we have successfully recruited current and prospective state employees for research through the Commonwealth’s Human Resources Division.

Community-based organizations: These might include local non-profits, community groups, or legal aid organizations. In Nava’s work with PFML, we have successfully recruited by working with local community-based organizations like the Coalition for Social Justice and Greater Boston Legal Services.

Send an initial email to see if participants are interested in participating in research. This email should include a demographic screener, taking into consideration your screening criteria. You can use this template as a starting point.

At this point in the process of preparing for your usability test, you should have:

Decided whether to conduct a moderated or unmoderated test

Written a research brief

Written an interview script (if moderated) or a test plan (if unmoderated)

Developed screening criteria

Recruited a pool of potential participants

In the next toolkit, we’ll cover how to screen and schedule participants from your pool, implement your test, and follow up on your findings.

Written by

Design Manager

Senior Designer/Researcher